Openswitch, Docker & Linux Networking - Part 2: Build a Network

EDIT 01Nov2016

It looks like openswitch is going through a reboot of sorts (hard, soft, whatever), so I'm not sure how relevant this information is anymore. I'll leave it up as an archive, but be warned that it may be as irrelevant as the Lucas plot points for Episode 7.

In this post I'll be covering how to build an Openswitch network using docker and some linux networking tricks.

Hopefully you have already read through Part 1. TL;DR? You need an Openswitch image in a docker container for this part. Sure, you can get an OVA and play with that, or use mininet with your containers, but we are going to have some fun peeking underneath the docker network command and uncovering a veth pair or two.

Before I started labbing this stuff up I watched a preso by Kelsey Hightower at the recent devops4networking event. The gist of it was that container networking is just linux networking, and everything would be easier if we acknowledged this and stopped applying new terms to the fundamentals. Take docker's phrase 'multi-host networking'. Huh? So, in other words, just networking then, guys.

My point is, when I started out I thought I was going to be learning all about docker networking, but then I realised that in fact I needed to catch up with the basics of linux networking.

In the words of Monty Python:

'Get on with it!'

Build the Openswitch network

So you've got your openswitch image up and running in a container, logged in and played around with the CLI. All good fun. Now to build up the network.

Multiple openswitch containers are really easy to build: just enter the docker run command a few times with different names. N.B. I'm using my 'joeneville/ops' repository on docker hub for my image:

```
joe@u14-4:~$ docker run --privileged -v /tmp:/tmp -v /dev/log:/dev/log -v /sys/fs/cgroup:/sys/fs/cgroup -h ops1 --name ops1 joeneville/ops:latest /sbin/init &
[1] 13951
joe@u14-4:~$ docker run --privileged -v /tmp:/tmp -v /dev/log:/dev/log -v /sys/fs/cgroup:/sys/fs/cgroup -h ops2 --name ops2 joeneville/ops:latest /sbin/init &
[2] 15167
joe@u14-4:~$ docker run --privileged -v /tmp:/tmp -v /dev/log:/dev/log -v /sys/fs/cgroup:/sys/fs/cgroup -h ops3 --name ops3 joeneville/ops:latest /sbin/init &
[3] 17383
joe@u14-4:~$ docker ps
CONTAINER ID   IMAGE                   COMMAND        CREATED          STATUS          PORTS     NAMES
3d1619936bad   joeneville/ops:latest   "/sbin/init"   2 seconds ago    Up 1 seconds              ops3
977fb02c1896   joeneville/ops:latest   "/sbin/init"   8 seconds ago    Up 7 seconds              ops2
6f94072f5b0b   joeneville/ops:latest   "/sbin/init"   15 seconds ago   Up 14 seconds             ops1
```

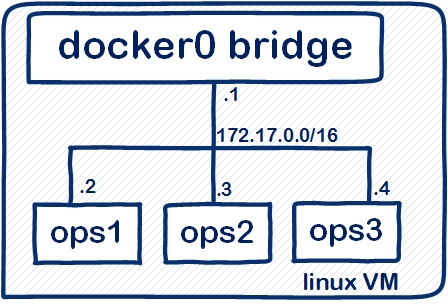

Beautiful. Now, if you are following along, you should have guessed that all three containers will be connected to the docker0 bridge network:

```
joe@u14-4:~$ docker network inspect bridge | egrep 'Name|IPv4Address'
"Name": "bridge",
"Name": "ops3",
"IPv4Address": "172.17.0.4/16",
"Name": "ops1",
"IPv4Address": "172.17.0.2/16",
"Name": "ops2",
"IPv4Address": "172.17.0.3/16",
```

That was easy, wasn't it?
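If you rebuild this lab often, the three docker run commands can be wrapped in a loop. Here's a minimal sketch in shell; the start_ops and run helper names are my own, and it assumes docker run's -d (detached) flag instead of backgrounding each command with &. Setting DRY_RUN=1 prints the commands instead of running them, so you can eyeball them first.

```shell
#!/bin/sh
# Start ops1..opsN with the same flags as the docker run commands above.
# Assumption: -d (detached) replaces backgrounding each command with &.

IMAGE="joeneville/ops:latest"

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# $1 = number of switches to start (defaults to 3).
start_ops() {
  count=${1:-3}
  i=1
  while [ "$i" -le "$count" ]; do
    run docker run -d --privileged \
      -v /tmp:/tmp -v /dev/log:/dev/log -v /sys/fs/cgroup:/sys/fs/cgroup \
      -h "ops$i" --name "ops$i" "$IMAGE" /sbin/init
    i=$((i + 1))
  done
}
```

Preview with DRY_RUN=1 start_ops 3, then run start_ops 3 for real to get ops1, ops2 and ops3.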

But hold on! We want an openswitch network not a docker network. We need to go deeper.

On to Openswitch

Log in to one of the containers (ops1 will do) and take a look at the interfaces:

```
joe@u14-4:~$ ssh root@172.17.0.2
root@switch:~# ip add
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.2/16 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:acff:fe11:2/64 scope link
       valid_lft forever preferred_lft forever
```

There you can see the eth0 int attached to the docker0 bridge. This is a bit like the management interface on a device. If you think about how networkers normally build stuff in the lab, it isn't the mgmt port they use to attach switches together; it's port 1/1 on the front panel.

How do we get access to the openswitch ports and get them connected up?

Log into openswitch with vtysh and do a show interface brief:

```
root@switch:~# vtysh
switch# show interface brief
--------------------------------------------------------------------------------
Ethernet         VLAN    Type Mode   Status  Reason   Speed     Port
Interface                                             (Mb/s)    Ch#
--------------------------------------------------------------------------------
bridge_normal    --      eth  routed up               auto      --
1                --      eth  routed down             auto      --
2                --      eth  routed down             auto      --
3                --      eth  routed down             auto      --
4                --      eth  routed down             auto      --
```

I've truncated the output; there are over 50 ports. It is these that we need to connect up.

Open up int 1 and examine it:

```
switch# conf t
switch(config)# int 1
switch(config-if)# no shut
switch(config-if)# exit
switch(config)# exit
switch# sh int 1
Interface 1 is up
Admin state is up
```

Great, so the interface is up/up. As a networker, that is music to my ears. I'm thinking the Cat6 is all connected up and we are ready to crack on with config.

But we're in the ethereal world of virtual networks here, not solid copper cables. Like some Matrix construct thingy.

If the interface is up, what does that mean, and how can we connect two of these ports together when they are on different openswitches in different containers?

It seems logical to me that the place to start with connecting the openswitches together is to connect up the containers. We have our mgmt network with docker0, so why not just create more networks like it to connect ops1, 2 & 3 together? That's one step in the right direction, but far from the whole story.

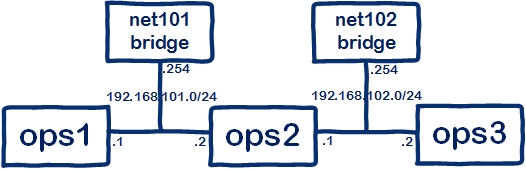

docker network

The docker network command does indeed allow us to build our own networks and attach containers to them. The following set of commands will build two networks and attach the containers to them. Remember these need to be executed on the linux shell of your host, so drop out of openswitch and the container first.

```
# create two networks, define the addressing, gateway and name
docker network create --subnet=192.168.101.0/24 --gateway=192.168.101.254 net101
docker network create --subnet=192.168.102.0/24 --gateway=192.168.102.254 net102

# connect the containers to the networks
docker network connect net101 ops1
docker network connect net101 ops2
docker network connect net102 ops2
docker network connect net102 ops3
```
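The same two networks and four connections can be kept as a small topology table and replayed whenever you rebuild. A sketch, assuming the net101/net102 layout above; build_lab and run are hypothetical helper names, and DRY_RUN=1 prints the docker commands rather than running them. I've kept the connect order the same as above because, as far as I can tell, that order is what decides which container interface ends up as eth1 and which as eth2.

```shell
#!/bin/sh
# Build the lab networks from a small topology table.

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# network-name  subnet  gateway
NETWORKS="net101 192.168.101.0/24 192.168.101.254
net102 192.168.102.0/24 192.168.102.254"

# network-name  container (order matters for eth1/eth2 naming)
LINKS="net101 ops1
net101 ops2
net102 ops2
net102 ops3"

build_lab() {
  printf '%s\n' "$NETWORKS" | while read -r name subnet gw; do
    run docker network create --subnet="$subnet" --gateway="$gw" "$name"
  done
  printf '%s\n' "$LINKS" | while read -r net ctr; do
    run docker network connect "$net" "$ctr"
  done
}
```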

Log in to one of the containers and we can see the new interfaces; ops2 is a good candidate because it sits on two networks:

```
joe@u14-4:~$ ssh root@172.17.0.3
root@switch:~# ip add | egrep eth
10: eth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.3/16 scope global eth0
18: eth1@if19: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:c0:a8:65:02 brd ff:ff:ff:ff:ff:ff
    inet 192.168.101.2/24 scope global eth1
20: eth2@if21: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:c0:a8:66:01 brd ff:ff:ff:ff:ff:ff
    inet 192.168.102.1/24 scope global eth2
```
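The eth1@if19 notation is worth decoding: the number before the colon is the interface's own index, and the number after @if is the index of the other end of its veth pair. Here's a small awk sketch that pulls those out of ip add (or ip link) output; veth_peers is a hypothetical name, and it assumes the header-line format shown above.

```shell
#!/bin/sh
# Print "name ifindex peer_ifindex" for every interface header with an @ifN suffix,
# e.g. "18: eth1@if19: <...>" becomes "eth1 18 19". Reads ip output on stdin.
veth_peers() {
  awk -F': ' '/^[0-9]+: [^ ]+@if[0-9]+:/ {
    idx = $1                      # own interface index
    split($2, parts, "@if")       # "eth1@if19" -> "eth1", "19"
    print parts[1], idx, parts[2]
  }'
}
```

Paste the function into a shell on the container or the host and pipe ip output into it, e.g. ip link | veth_peers. On the host the same function lists the veth ends, so you can line the two sides up by eye.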

Log out back to your linux host and check the docker network inspect command for the new networks:

```
joe@u14-4:~$ docker network inspect net101 | egrep 'Name|Gateway|IPv4Add'
"Name": "net101",
"Gateway": "192.168.101.254"
"Name": "ops1",
"IPv4Address": "192.168.101.1/24",
"Name": "ops2",
"IPv4Address": "192.168.101.2/24",
```

Originally I thought the network I was creating would look like this:

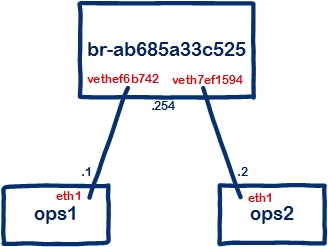

But now check out an ip addr on your linux host and things look a lot more complicated.

Peek behind the curtain

Here's my ip addr:

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default
    inet 127.0.0.1/8 scope host lo
2: em1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    inet 192.168.1.253/24 brd 192.168.1.255 scope global em1
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    inet 172.17.0.1/16 scope global docker0
9: veth041f711@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
11: vethaf570b8@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
13: veth5f72fa5@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
14: br-ab685a33c525: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    inet 192.168.101.254/24 scope global br-ab685a33c525
15: br-36c087299e82: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    inet 192.168.102.254/24 scope global br-36c087299e82
17: vethef6b742@if16: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-ab685a33c525 state UP group default
19: veth7ef1594@if18: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-ab685a33c525 state UP group default
21: veth2177ec2@if20: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-36c087299e82 state UP group default
23: vethb8f0c18@if22: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-36c087299e82 state UP group default
```

That does seem pretty complex and not what I expected.

Picking through this:

- We have the lo, em1 & docker0 bridge.

- There are the net101 & net102 bridges, here called 'br-ab685a33c525' and 'br-36c087299e82' respectively.

- The rest are veth interfaces. These connect to the bridges, and the master field on each line shows which bridge. Three are connected to docker0, two to the net101 bridge, and two to the net102 bridge.

veth interfaces: what are they? Well, they come in pairs and act like a pipe: whatever traffic goes in one veth comes out of its partner in the pair.

If we've got a bunch of veth interfaces on our new linux bridges and they come in pairs, where are the other ends? On the containers of course.

Use ethtool to match up the interface index numbers of each end[1]:

```
# take one of the new veth interfaces as an example
17: vethef6b742@if16: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-ab685a33c525 state UP group default

# run ethtool on the veth name
joe@u14-4:~$ ethtool -S vethef6b742
NIC statistics:
     peer_ifindex: 16

# log into ops1
joe@u14-4:~$ ssh root@172.17.0.2

# check ip add and observe the interface index, 16 in this case
root@switch:~# ip add | grep eth1
16: eth1@if17: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    inet 192.168.101.1/24 scope global eth1

# to confirm, run ethtool on this end
root@switch:~# ethtool -S eth1
NIC statistics:
     peer_ifindex: 17
```
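Doing this by hand for every veth gets old quickly. A sketch for the host side: given the index of a container interface, it asks ethtool for the peer_ifindex of each host veth and prints the ones that match. peer_ifindex and find_host_veth are hypothetical names, and it assumes ethtool is installed and reports peer_ifindex as in the output above. One caution: interface indexes are only unique within a namespace, so treat a match as a strong hint rather than proof.

```shell
#!/bin/sh
# Extract the peer index from `ethtool -S <veth>` output on stdin.
peer_ifindex() {
  awk '$1 == "peer_ifindex:" { print $2 }'
}

# $1 = ifindex of the container-side interface (e.g. 16 for ops1's eth1).
# Prints every host veth whose peer has that index.
find_host_veth() {
  for path in /sys/class/net/veth*; do
    [ -e "$path" ] || continue          # glob matched nothing
    dev=${path##*/}                     # strip directory, keep interface name
    if [ "$(ethtool -S "$dev" 2>/dev/null | peer_ifindex)" = "$1" ]; then
      echo "$dev"
    fi
  done
}
```

In the lab above, find_host_veth 16 on the host should come back with vethef6b742.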

The docker network command, when creating a network, actually creates a linux bridge. When we connect containers to this new network, we are actually creating veth interface pairs from the bridge to the containers. That is how our virtual infrastructure is built up. If we ping from one container to another, the packets travel across the local veth pair to the linux bridge, then down the other veth pair.

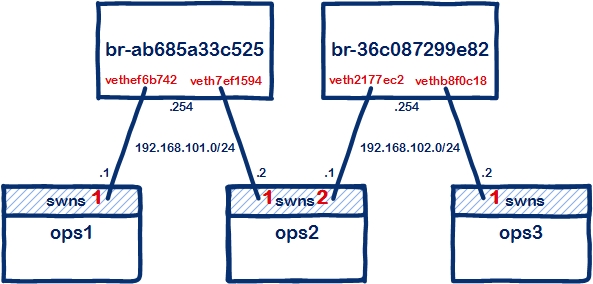
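To make the picture concrete, here is roughly what that amounts to if you build it by hand with iproute2. This is a sketch of the idea, not Docker's actual implementation: the bridge br-lab, the vh-/vc- interface names and the 192.168.201.254/24 address are hypothetical, the commands need root, and the container's PID comes from docker inspect. DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Hand-built bridge + veth pair, approximating docker network create/connect.

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# $1 = bridge name, $2 = bridge address/prefix (plays the gateway role).
make_bridge() {
  run ip link add "$1" type bridge
  run ip addr add "$2" dev "$1"
  run ip link set "$1" up
}

# $1 = bridge, $2 = container name, $3 = PID of the container's init process.
attach() {
  # One veth pair per container: vh-* stays on the host, vc-* goes to the container.
  run ip link add "vh-$2" type veth peer name "vc-$2"
  run ip link set "vh-$2" master "$1"
  run ip link set "vh-$2" up
  # "netns <PID>" moves the interface into that process's network namespace.
  run ip link set "vc-$2" netns "$3"
}
```

Usage would be along the lines of make_bridge br-lab 192.168.201.254/24 followed by attach br-lab ops1 "$(docker inspect -f '{{.State.Pid}}' ops1)". The container end still needs an address and to be brought up from inside the container.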

Ok, now we are getting closer and we can visualize the component pieces of the puzzle with veth interfaces instead of cables connecting our containers together. But this still has not answered how we can reach further in and connect up the openswitch interfaces. For that we need to jump into network namespaces.

Enter Network Namespaces

What is a network namespace? Everyone says it is a bit like a VRF, but not really. I think initially it is helpful to think of them as VRFs, because that gives us network drones a handle on the concept. Linux uses namespaces to isolate all kinds of resources for processes, including those of the network variety. Network namespaces kind of feel like VRFs because:

- They have their own routing table and interfaces.

- You run namespace-specific commands: to do an `ip addr` in a namespace you run `ip netns exec <namespace> ip addr`.

- If you move an interface from one namespace to another it loses its IP address, just like... well, you get the idea.
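If you want to see this VRF-like behaviour for yourself, away from docker, two namespaces joined by a veth pair make a handy test. A sketch that needs root; the namespaces ns-a/ns-b, the interfaces va/vb and the 10.99.0.0/24 addresses are all hypothetical, and DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Two network namespaces connected by a veth pair.

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

netns_demo() {
  run ip netns add ns-a
  run ip netns add ns-b
  # The pair is created in the current (root) namespace...
  run ip link add va type veth peer name vb
  # ...then each end is moved into its own namespace.
  run ip link set va netns ns-a
  run ip link set vb netns ns-b
  # Addresses go on after the move, since moving an interface drops its IP config.
  run ip netns exec ns-a ip addr add 10.99.0.1/24 dev va
  run ip netns exec ns-b ip addr add 10.99.0.2/24 dev vb
  run ip netns exec ns-a ip link set va up
  run ip netns exec ns-b ip link set vb up
  # Traffic into va comes out of vb, so ns-a can reach ns-b.
  run ip netns exec ns-a ping -c 1 10.99.0.2
}

netns_cleanup() {
  # Deleting the namespaces destroys the veth ends inside them.
  run ip netns del ns-a
  run ip netns del ns-b
}
```

Compare ip addr on the host with ip netns exec ns-a ip addr while the demo is up: va only shows up inside ns-a.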

Why are we concerned with namespaces at this point?

- Openswitch interfaces sit in their own namespace. Log into the ops1 container and run `ip netns list`: the openswitch interfaces sit in swns.

- veth interfaces can be used to connect network namespaces together or to the outside world.

- To connect up our openswitch nodes we need to move the veth interface on the container into the openswitch namespace.

- veth ints come in pairs and we only move one end, so effectively we now have one end on the linux bridge and one in the openswitch namespace. Do that for both containers and openswitch connectivity shall be your reward.

- Final point: how do we know which port on openswitch connects to the veth int we are moving into the namespace? Good question. We just give the interface the same name as the openswitch port before the move. Confused? Don't worry, just keep reading, it works!

Here are the process steps, followed by a capture of the commands:

- SSH to an openswitch container, ops1 for example.

- Shut down the interface created by docker network.

- Rename the interface so that it matches the openswitch port, e.g. 1.

- Move this interface into the openswitch namespace with `ip link set <int_name> netns <namespace_name>`.

- Log in to openswitch and configure port 1.

- Repeat on a neighbouring container and ping!

```
# ssh to an openswitch container, ops1 for example
joe@u14-4:~$ ssh root@172.17.0.2

# shut down the interface created by docker network
root@switch:~# ip link set eth1 down

# rename the interface so that it matches the openswitch port
root@switch:~# ip link set eth1 name 1

# move this interface into the openswitch namespace, swns
root@switch:~# ip link set 1 netns swns

# log in to openswitch and configure port 1
root@switch:~# vtysh
switch# conf t
switch(config)# hostname ops1
ops1(config)# int 1
ops1(config-if)# ip add 192.168.101.1/24

# repeat on ops2 and ping
joe@u14-4:~$ ssh root@172.17.0.3
root@switch:~# ip link set eth1 down
root@switch:~# ip link set eth1 name 1
root@switch:~# ip link set 1 netns swns
root@switch:~# vtysh
switch# conf t
switch(config)# hostname ops2
ops2(config)# int 1
ops2(config-if)# ip add 192.168.101.2/24
ops2(config-if)# ^Z
ops2# ping 192.168.101.1
PING 192.168.101.1 (192.168.101.1) 100(128) bytes of data.
108 bytes from 192.168.101.1: icmp_seq=1 ttl=64 time=0.102 ms

--- 192.168.101.1 ping statistics ---
5 packets transmitted, 5 received, 0% packet loss, time 3999ms
rtt min/avg/max/mdev = 0.051/0.065/0.102/0.018 ms
```

It works! We can now build up the rest of our network using the same method, here's a visual depiction of my desired end state at the linux host level:

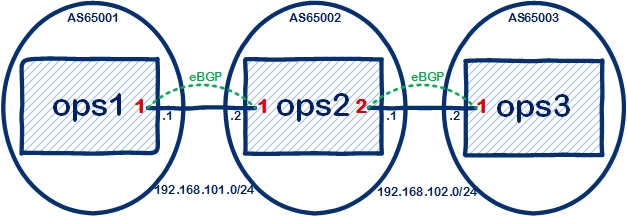

Here is the same but at the openswitch level, just a simple three node network running eBGP:

Here are the commands, linux and openswitch from scratch:

```
# ops1 linux shell
ip link set eth1 down
ip link set eth1 name 1
ip link set 1 netns swns

# ops1 openswitch
hostname ops1
router bgp 65001
 bgp router-id 192.168.101.1
 network 192.168.101.0/24
 neighbor 192.168.101.2 remote-as 65002
interface 1
 no shutdown
 ip address 192.168.101.1/24

# ops2 linux shell
ip link set eth1 down
ip link set eth1 name 1
ip link set 1 netns swns
ip link set eth2 down
ip link set eth2 name 2
ip link set 2 netns swns

# ops2 openswitch
hostname ops2
interface 1
 no shutdown
 ip address 192.168.101.2/24
interface 2
 no shutdown
 ip address 192.168.102.1/24
router bgp 65002
 bgp router-id 192.168.101.2
 neighbor 192.168.101.1 remote-as 65001
 neighbor 192.168.102.2 remote-as 65003

# ops3 linux shell
ip link set eth1 down
ip link set eth1 name 1
ip link set 1 netns swns

# ops3 openswitch
hostname ops3
interface 1
 no shutdown
 ip address 192.168.102.2/24
router bgp 65003
 bgp router-id 192.168.102.2
 neighbor 192.168.102.1 remote-as 65002
 network 192.168.102.0/24
```
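Rather than SSHing into each container for the linux-shell part, the same three ip link commands can be driven from the host with docker exec. A sketch, assuming the containers, networks and eth1/eth2 naming from above; move_to_swns, wire_all and PORT_MAP are hypothetical names, and the openswitch config itself still goes in through vtysh. DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Host-side version of the linux-shell steps, using docker exec instead of ssh.

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# container  docker-interface  openswitch-port
PORT_MAP="ops1 eth1 1
ops2 eth1 1
ops2 eth2 2
ops3 eth1 1"

# $1 = container, $2 = interface docker created, $3 = openswitch port name.
move_to_swns() {
  run docker exec "$1" ip link set "$2" down
  run docker exec "$1" ip link set "$2" name "$3"
  run docker exec "$1" ip link set "$3" netns swns
}

wire_all() {
  printf '%s\n' "$PORT_MAP" | while read -r ctr eth port; do
    # stdin from /dev/null so docker can't consume the rest of the port map.
    move_to_swns "$ctr" "$eth" "$port" </dev/null
  done
}
```

Run DRY_RUN=1 wire_all to check the twelve commands, then wire_all to apply them before configuring each switch in vtysh.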

```
# check ops1 BGP table for the local and remote prefix
ops1# sh ip bgp
Status codes: s suppressed, d damped, h history, * valid, > best, = multipath,
              i internal, S Stale, R Removed
Origin codes: i - IGP, e - EGP, ? - incomplete

Local router-id 192.168.101.1
   Network          Next Hop          Metric LocPrf Weight Path
*> 192.168.101.0/24 0.0.0.0                0      0  32768 i
*> 192.168.102.0/24 192.168.101.2          0      0  32768 65002 65003 i

Total number of entries 2

# ping ops3
ops1# ping 192.168.102.2
PING 192.168.102.2 (192.168.102.2) 100(128) bytes of data.
108 bytes from 192.168.102.2: icmp_seq=1 ttl=63 time=0.089 ms

--- 192.168.102.2 ping statistics ---
5 packets transmitted, 5 received, 0% packet loss, time 3999ms
rtt min/avg/max/mdev = 0.076/0.082/0.089/0.009 ms
```

Here comes success!
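If you end up scripting the lab, the BGP check above can be automated too. A sketch that reads a captured sh ip bgp table on stdin and succeeds only if there's a best (*>) route for a given prefix; has_best_route is a hypothetical name, it assumes the column layout shown above, and how you capture the output is up to you.

```shell
#!/bin/sh
# $1 = prefix, e.g. 192.168.102.0/24; the BGP table is read from stdin.
# Exit status 0 if a line starts with "*>" followed by that prefix, 1 otherwise.
has_best_route() {
  awk -v p="$1" '$1 == "*>" && $2 == p { found = 1 } END { exit !found }'
}
```

For example, feeding it the ops1 table above with 192.168.102.0/24 should succeed, confirming the route learned via 65002 65003.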

Where Next?

Through writing these openswitch posts I feel like I've learnt a lot, but that I'm only just getting started. I hope you can appreciate how exciting the openswitch project is, and that I've given you an insight into how to get up and running. But I also hope you can see the range of opportunities openswitch presents. You can approach it with a networker's hat on and just build up networks and play around with the openswitch CLI; if you find bugs or weird command outputs at this level, the openswitch community would like to hear about them.

However, far greater are the opportunities to delve into the brave new world of linux & software dev in which the openswitch project exists. With this project you have a chance to step beyond networks and CLI and see how open source projects operate. If that's too alien for you, the openswitch guys are planning to make not just a fully-featured network OS but one with a real cutting-edge feature set, embracing new and exciting developments in networking. That's openswitch, a network OS for the DevOps age.

[1] Notice that on the container the interface appears as a standard interface rather than a veth pair, though I'm currently unsure why.